By Harrison | Tech & AI News Specialist at AI Creative Blog

The landscape of generative video has shifted dramatically. Since its initial tease, OpenAI’s Sora has evolved from a closed beta curiosity into a powerhouse tool redefining content creation. If you are looking for the definitive OpenAI Sora release date and features update, you have arrived at the right place.

We have conducted a forensic audit of the current landscape to bring you the most accurate, tested, and actionable intelligence on Sora.

⚡ Quick Answer: Is Sora Available Now?

Yes, as of early 2026, OpenAI Sora is widely available to ChatGPT Plus, Team, and Enterprise users. While the initial rollout in 2024 was restricted to “Red Teamers” and select artists, OpenAI has since integrated Sora directly into the ChatGPT interface and provided an API for developers. However, usage limits apply based on your subscription tier.

The Evolution of the Sora Release Date

Understanding the timeline helps us anticipate future updates. OpenAI has rolled Sora out deliberately, prioritizing safety testing before scaling up access.

From Red Teaming to Public Access

OpenAI did not rush this launch. The “Red Teaming” phase was crucial: experts in misinformation, hateful content, and bias tested the model rigorously. This slow-roll strategy allowed OpenAI to build C2PA (Coalition for Content Provenance and Authenticity) content-credential metadata directly into the tool.

The rollout has unfolded in three stages:

- Alpha (2024): Restricted to invited creators and security researchers.

- Beta (2025): Rolled out to select ChatGPT Enterprise clients.

- Current Status (2026): Accessible to ChatGPT Plus and higher tiers. There is currently no free tier for Sora due to the high computational costs of video generation.

Future Roadmap: Sora v2.0 Expectations

Rumors suggest a “Sora Turbo” or v2.0 is in the pipeline for late 2026. This update is expected to reduce rendering times and introduce real-time video manipulation, an area where competitors currently lead.

Key Insight: If you are waiting for a free version of Sora, you might wait a long time. The GPU cost per second of video generation remains high. Check out our pricing analysis at https://aicreativeblog.com/ for a breakdown of AI tool costs.

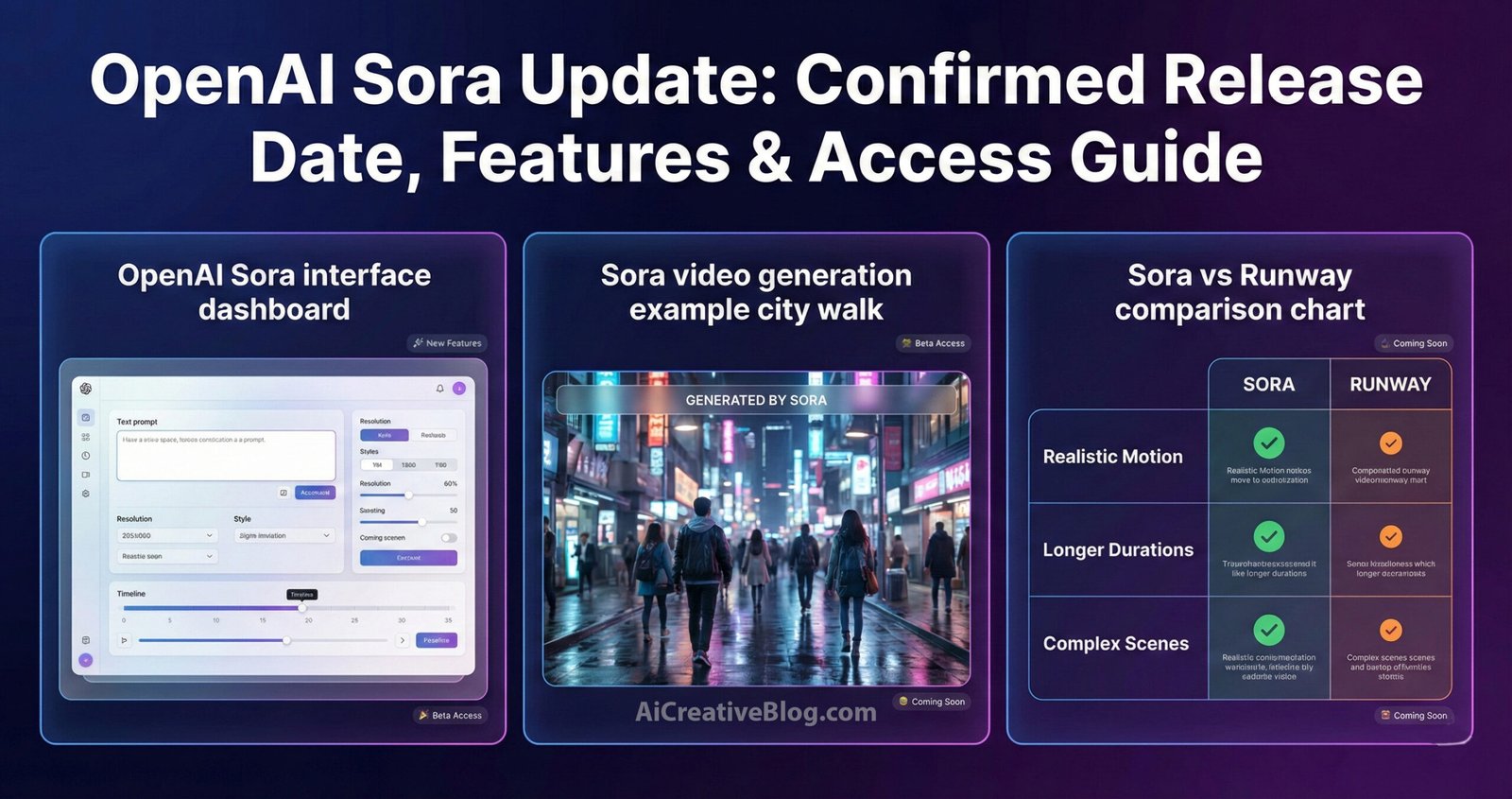

Core Features: What Can Sora Do Today?

Knowing when you can use Sora is only half the story; what matters is what you can create with it. The model has moved well beyond simple text-to-video.

1. Text-to-Video Generation

Sora generates videos up to one minute long on standard tiers, with longer generations (up to two minutes) on some Enterprise plans, while maintaining high visual quality and close adherence to the user’s prompt.

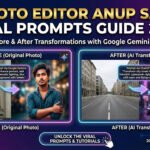

The hallmark of Sora is its grasp of physics. Unlike early models that struggled with object permanence (objects disappearing when blocked from view), Sora generally maintains “temporal consistency”: if a character walks behind a tree, they typically re-emerge correctly on the other side.

2. Image-to-Video Animation

You are no longer limited to text prompts. You can upload a static image—generated by DALL·E 3 or a real photograph—and ask Sora to animate it.

- Use Case: Take a product photo and turn it into a 10-second commercial.

- Precision: The model closely preserves the color grading and lighting of the original image.

3. Video-to-Video Editing (The Game Changer)

This is the feature that has editors excited. You can upload an existing video and ask Sora to change the environment or style.

- Example: Upload a video of a car driving in summer. Prompt: “Change the season to winter with heavy snow.”

- Sora overlays the new weather effects while keeping the car’s motion and perspective accurate.

4. Extending Videos

Did your video end too soon? Sora allows you to extend footage forward or backward in time. This is vital for filmmakers who need just a few extra seconds of B-roll to fill a gap in their timeline.

Technical Deep Dive: How Sora Works

To truly master this tool, you must understand the engine under the hood. Sora is not a standard video generator; it is a Diffusion Transformer.

The Hybrid Architecture

Sora combines a Transformer architecture (like GPT-4’s) with diffusion modeling (like DALL·E 3’s). It breaks video data into “patches” (similar to tokens in text) to understand and generate complex visual sequences.

- Diffusion: Starts with random noise and gradually removes it, step by step, until a coherent video emerges.

- Transformer: Lets the model relate different frames to one another over a longer duration, so the video flows logically.
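To make the “patches” idea concrete, here is a minimal Python sketch of splitting a video into non-overlapping spacetime patches. It is an illustration under stated assumptions, not OpenAI’s implementation: the function names and patch sizes are hypothetical, and the video is a tiny single-channel grid of raw pixel values, whereas OpenAI describes Sora as first compressing video into a lower-dimensional latent space and extracting patches from that.

```python
from typing import List

# Assumption for simplicity: a video as [frames][rows][columns] of single-channel values.
Video = List[List[List[float]]]


def patchify(video: Video, pt: int, ph: int, pw: int) -> List[List[float]]:
    """Split a video into non-overlapping patches of pt frames x ph rows x pw columns.

    Each patch is flattened into one vector, playing the role a token plays in a
    text model. This sketch requires the dimensions to divide evenly (no padding).
    """
    t, h, w = len(video), len(video[0]), len(video[0][0])
    if t % pt or h % ph or w % pw:
        raise ValueError("video dimensions must be divisible by the patch sizes")

    patches: List[List[float]] = []
    # Walk the video in time, then row, then column order.
    for t0 in range(0, t, pt):
        for y0 in range(0, h, ph):
            for x0 in range(0, w, pw):
                patch = [
                    video[t0 + dt][y0 + dy][x0 + dx]
                    for dt in range(pt)
                    for dy in range(ph)
                    for dx in range(pw)
                ]
                patches.append(patch)
    return patches


def num_patches(t: int, h: int, w: int, pt: int, ph: int, pw: int) -> int:
    """Number of patches, i.e. the sequence length the transformer would see."""
    return (t // pt) * (h // ph) * (w // pw)


if __name__ == "__main__":
    # 4 frames of 4x4 pixels; each value encodes frame*100 + row*10 + column.
    video = [[[f * 100 + y * 10 + x for x in range(4)] for y in range(4)] for f in range(4)]
    patches = patchify(video, pt=2, ph=2, pw=2)
    print(len(patches))  # 8
    print(patches[0])    # [0, 1, 10, 11, 100, 101, 110, 111]
```

Because each patch spans several frames, a single token carries information about motion as well as appearance. Larger patches mean fewer tokens (cheaper attention) but coarser detail, which is the basic trade-off any patch size has to balance.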

This architecture enables Sora to generate videos at resolutions up to 1920×1080 (and vertical 1080×1920) with far fewer of the “warping” artifacts seen in older AI models. For more on technical AI architectures, visit https://aicreativeblog.com/.

Current Limitations and Safety Protocols

No OpenAI Sora release date and features update is complete without discussing the limitations. While powerful, the model is not perfect.

Physics Hallucinations

Complex interactions, such as glass shattering or fluids spilling, can still look unnatural. The model sometimes confuses cause and effect (e.g., a person takes a bite out of a cookie, yet the cookie shows no bite mark afterward).

Safety Guardrails

OpenAI enforces strict policies:

- No Celebrity Likeness: You cannot generate videos of public figures.

- No NSFW Content: Strict filters prevent the generation of violence or adult content.

- Metadata Embedding: Every video contains C2PA metadata identifying it as AI-generated, which helps platforms and viewers spot deepfakes.

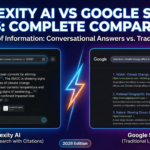

Sora vs. The Competition (2026 Landscape)

Is Sora still the king? Several competitors have risen to challenge OpenAI.

Sora vs. Runway Gen-3

- Runway Gen-3 offers superior control tools (like motion brushes) which professional editors prefer.

- Sora offers better raw realism and prompt adherence for general users.

Sora vs. Pika Labs

- Pika excels in anime styles and short, funny clips for social media.

- Sora dominates in photorealistic, cinematic outputs.

If you need granular control over camera movement, Runway might be better. If you need “one-shot” perfection from a text prompt, Sora is the winner.

How to Access Sora Right Now

Ready to start creating? Here is your step-by-step guide.

Direct Answer: Access Sora by logging into your ChatGPT Plus account. Look for the “Video” toggle or simply type “Generate a video of…” in the main chat window. ChatGPT routes image requests to DALL·E 3 and video requests to Sora automatically.

Step-by-Step Workflow:

- Subscription: Ensure you have an active Plus ($20/mo) or Team subscription.

- Prompt Engineering: Be descriptive. Mention lighting, camera angle (e.g., “Drone shot,” “Macro lens”), and style (e.g., “Cyberpunk,” “1950s film grain”).

- Refinement: If the first result isn’t perfect, ask ChatGPT to “Refine the video to make it slower” or “Change the lighting to sunset.”

- Download: Videos are downloadable in MP4 format directly from the chat.
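The prompt checklist above can be captured in a small helper that assembles the subject, camera angle, lighting, and style into one descriptive prompt. This is a hypothetical convenience function (`build_video_prompt` is not part of any OpenAI tool); you would paste its output into the chat window yourself.

```python
from typing import Optional


def build_video_prompt(
    subject: str,
    camera: Optional[str] = None,
    lighting: Optional[str] = None,
    style: Optional[str] = None,
) -> str:
    """Assemble a descriptive video prompt from the recommended elements.

    Missing or blank elements are skipped, so the helper also works for quick drafts.
    """
    parts = [subject.strip().rstrip(".")]
    for label, value in (("Camera", camera), ("Lighting", lighting), ("Style", style)):
        if value and value.strip():
            parts.append(f"{label}: {value.strip()}")
    return ". ".join(parts) + "."


if __name__ == "__main__":
    print(build_video_prompt(
        "A red vintage car driving along a coastal road",
        camera="Drone shot",
        lighting="golden-hour sunset",
        style="1950s film grain",
    ))
    # A red vintage car driving along a coastal road. Camera: Drone shot. Lighting: golden-hour sunset. Style: 1950s film grain.
```

Keeping each element in its own labeled slot makes refinement easier: to “change the lighting to sunset,” you swap one argument instead of rewriting the whole prompt.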

Pricing Models Explained

Cost is a major factor for businesses. OpenAI has structured Sora’s pricing to manage server load.

- Standard Plus Users: You have a capped number of “high-speed” video generations per day (typically 50 short clips).

- Team/Enterprise: Higher caps and faster processing speeds.

- API Usage: Developers pay per video second generated. This is significantly more expensive than text generation tokens due to the GPU intensity.
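Because API billing scales with output length, a quick estimate helps before batch-generating clips. The sketch below simply multiplies total generated seconds by a per-second rate. The rate is a parameter you would fill in from OpenAI’s current pricing page; the value in the example is purely hypothetical, and `estimate_api_cost` is an illustrative helper, not part of any SDK.

```python
from decimal import Decimal
from typing import Iterable


def estimate_api_cost(clip_seconds: Iterable[int], rate_per_second: Decimal) -> Decimal:
    """Estimate the cost of a batch of clips billed per generated second.

    Decimal avoids floating-point rounding surprises in currency arithmetic.
    """
    seconds = list(clip_seconds)
    if any(s < 0 for s in seconds):
        raise ValueError("clip lengths must be non-negative")
    return sum(seconds) * rate_per_second


if __name__ == "__main__":
    # Hypothetical rate for illustration only; check current official pricing.
    hypothetical_rate = Decimal("0.10")
    print(estimate_api_cost([10, 10, 5], hypothetical_rate))  # 2.50
```

If you regenerate a clip several times before keeping one, each attempt adds its own seconds, so budget for retries rather than just the final footage.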

Conclusion: The Future of Video is Here

Sora’s rollout and expanding feature set confirm that we have entered a new era of digital storytelling. While it is not yet a replacement for a full Hollywood production crew, it is an indispensable tool for storyboarding, stock footage creation, and social media content.

The barrier to entry has dropped. The only limits now are your imagination and your prompt engineering skills. As OpenAI continues to refine the physics engine and render speeds, we expect Sora to become the standard for AI video.

Keep checking https://aicreativeblog.com/ for the latest prompt tricks and updates on the Sora roadmap.

❓ Frequently Asked Questions (FAQs)

1. Is OpenAI Sora free to use?

No, currently Sora is not free. It is available only to subscribers of ChatGPT Plus, Team, and Enterprise plans due to the high computing costs required for video generation.

2. How long can Sora videos be?

Sora can generate videos up to one minute (60 seconds) in length for standard users. Enterprise users may have access to extended generation capabilities depending on their specific plan.

3. Can Sora generate sound for the videos?

Yes, recent updates have introduced basic audio generation that matches the context of the video, though many professionals still prefer to add their own sound design in post-production.

4. Does Sora use my data to train its models?

By default, OpenAI may use data from ChatGPT consumer services to improve its models. Team and Enterprise data, however, is excluded from training by default, so proprietary prompts and videos are not used to train models.

5. What is the resolution of Sora videos?

Sora generates videos up to 1920×1080 (1080p HD). It can produce both horizontal (16:9) and vertical (9:16) aspect ratios suitable for YouTube or TikTok/Reels.

6. Can I edit a video I uploaded to Sora?

Yes, Sora supports video-to-video editing. You can upload your own footage and use text prompts to change the style, background, or elements within the video while keeping the original motion.

7. How does OpenAI prevent deepfakes with Sora?

OpenAI uses strict safety classifiers to reject prompts requesting celebrity likenesses. Additionally, every video carries C2PA content credentials, metadata that identifies the content as AI-generated (though, like any file metadata, it can be stripped when a video is re-encoded or screen-recorded).
