By Harrison | Tech & AI News Specialist at AI Creative Blog

For the last two years, video editors have been watching the AI revolution with a mix of excitement and anxiety. We saw the viral clips from OpenAI’s Sora and Runway, but we also asked the boring, practical questions: “Can I actually use this in a client’s commercial without getting sued?” and “Do I have to leave my timeline to use it?”

The Adobe Firefly Video Model launch changes the conversation. Adobe didn’t try to be first; they tried to be the safest.

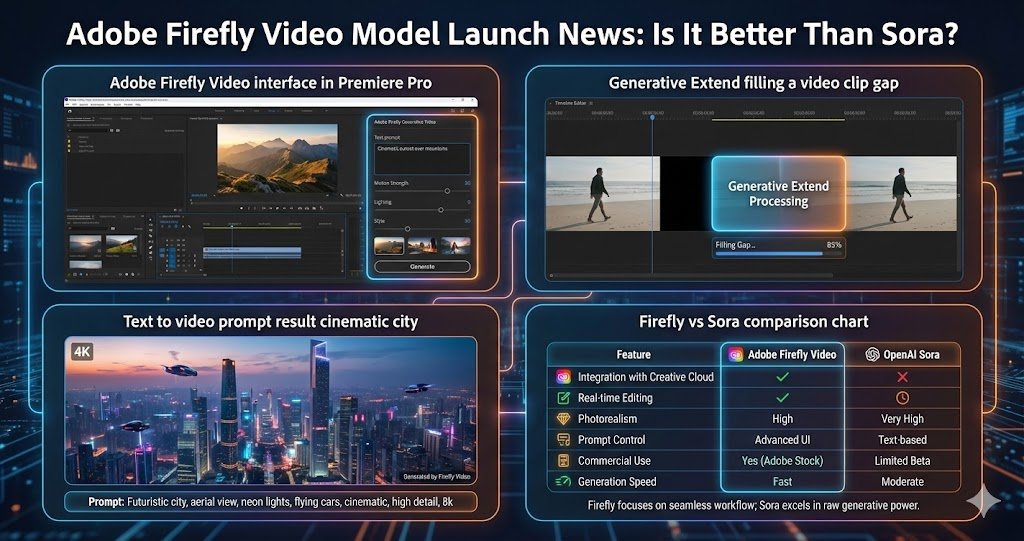

In 2026, the Firefly Video Model isn’t just a website you visit to make funny clips. It is an engine built directly into Premiere Pro and After Effects. It is designed to fix broken shots, extend clips that are too short, and generate B-roll without ever leaving your edit bay.

We tested the model to see if it lives up to the hype, or if it’s just another corporate gimmick.

Quick Answer: Why Should You Care?

The Killer Feature: It’s not just Text-to-Video. It’s Generative Extend. You can drag the edge of a video clip in your Premiere Pro timeline past where the recording stopped, and Firefly will invent roughly the next 2 seconds of footage, matching the lighting, camera movement, and noise of the original. It is the ultimate “fix it in post” tool.

1. The “Commercial Safety” Promise

Before we talk about quality, we have to talk about copyright. This is Adobe’s biggest weapon against competitors.

Tools like the ones we covered in our OpenAI Sora release date and features update are incredible, but their training data is a “black box.” Did they scrape YouTube? Maybe. For a Disney or Netflix editor, “maybe” isn’t good enough.

Adobe Firefly is different.

- The Data: It is trained exclusively on Adobe Stock footage and public domain content.

- The Guarantee: Adobe offers an “indemnification” clause for enterprise customers. If you get sued for copyright infringement because of a Firefly clip, Adobe pays the legal bills.

- The Vibe: This makes it the only generative video model that is truly “safe” for Super Bowl commercials and Hollywood movies.

2. Core Features: What Can It Do?

The launch brings three distinct capabilities to the Creative Cloud ecosystem.

A. Generative Extend (The Editor’s Best Friend)

We have all been there: the music swell needs 2 more seconds, but the actor stopped walking too soon.

- Old Way: Slow down the clip (looks choppy) or cut away.

- Firefly Way: Click the “Generative Extend” tool, drag the clip out, and wait. The AI analyzes the previous frames and hallucinates the rest.

- Reality Check: It works flawlessly for simple movements (walking, driving). It struggles with complex dialogue or sudden face turns.

B. Text-to-Video

You can type prompts like “Cinematic drone shot of a futuristic Tokyo, neon lights, rainy pavement” and get a 5-second clip.

- Quality: It is clean and high-resolution (up to 4K in some modes). The motion is smoother than early AI models, lacking that “jittery” look.

- Styling: It understands camera terminology (F-stop, focal length) surprisingly well.

C. Image-to-Video

This is massive for concept artists. You can take a still image—generated perhaps by Midjourney v7—and upload it to Firefly to animate it.

- Use Case: Create a character in Midjourney, then use Firefly to make them blink, smile, or look around. It bridges the gap between static art and motion graphics.

3. Integration: It Lives in Your Timeline

The biggest friction point with AI has been “App Fatigue.” You generate a video on Discord, download it, upscale it, convert it, and import it.

Adobe killed the friction.

- Open Premiere Pro.

- Open the “Generative Video” panel.

- Type your prompt.

- The video appears directly in your bin.

This workflow efficiency is similar to how Microsoft Copilot Pro integrates AI directly into Word and Excel. When the tool is right where you work, you are far more likely to use it.

4. Firefly vs. Sora vs. Runway (2026 Showdown)

Is Adobe the best? Not necessarily. It depends on what you value.

| Feature | Adobe Firefly Video | OpenAI Sora | Runway Gen-3 |
| --- | --- | --- | --- |
| Realism | High (Stock footage look) | Extremely High (Cinematic) | High (Artistic control) |
| Safety | 100% Commercial Safe | Grey Area | Grey Area |
| Control | Camera angles, Zoom | Prompt heavy | Motion Brush Tools |
| Workflow | Inside Premiere Pro | Web Interface / API | Web Interface |

Verdict: If you want to make a surreal music video, stick with Sora or Runway. If you are editing a corporate documentary and need a generic shot of “business people shaking hands,” Firefly is superior because it integrates instantly and won’t get you fired by Legal.

5. The “Generative Credits” System (Pricing)

Here is the catch. Video generation is expensive. Adobe uses a “Generative Credits” system.

- The Cost: Rendering a 5-second video clip costs significantly more credits than generating an image.

- The Limits: Most Creative Cloud plans come with a monthly allowance (e.g., 1,000 credits). Once you burn through those, the generation speed slows down aggressively unless you buy a “Pro” add-on pack.

- Comparison: It is similar to the token costs we see in Devin AI for coding tasks. Compute is the new currency.
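To see how quickly video can eat a monthly allowance, here is a minimal budgeting sketch. The 1,000-credit allowance is the example figure quoted above; the per-generation costs in `ASSUMED_COST` are hypothetical placeholders picked for illustration, not Adobe's published rates, so swap in the real numbers from your plan.

```python
# Example allowance quoted in the article; real plans differ.
MONTHLY_ALLOWANCE = 1_000

# HYPOTHETICAL per-generation costs, chosen only for illustration.
# These are assumptions, not Adobe's published rates.
ASSUMED_COST = {
    "image": 1,
    "video_1080p_5s": 100,
    "video_4k_5s": 400,
}


def generations_within(allowance: int, kind: str) -> int:
    """Return how many generations of one kind fit in the allowance."""
    return allowance // ASSUMED_COST[kind]


def credits_left(allowance: int, jobs: dict[str, int]) -> int:
    """Return credits remaining after a mix of jobs (negative means over budget)."""
    spent = sum(ASSUMED_COST[kind] * count for kind, count in jobs.items())
    return allowance - spent


if __name__ == "__main__":
    for kind in ASSUMED_COST:
        print(kind, generations_within(MONTHLY_ALLOWANCE, kind))
    mix = {"image": 50, "video_1080p_5s": 6, "video_4k_5s": 1}
    print("credits left:", credits_left(MONTHLY_ALLOWANCE, mix))
```

Under these assumed costs, a handful of video clips uses up an allowance that would otherwise cover hundreds of images, which is the practical meaning of "compute is the new currency."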

6. What About Mobile?

Adobe is also pushing a lighter version of these tools to mobile apps like Adobe Express.

While it’s handy, mobile chips still struggle with heavy rendering. For quick, on-device edits without the cloud wait times, the neural engine features in Apple Intelligence (iOS 18) are faster for simple tasks like object removal (“Clean Up”). For generating new pixels from scratch, Adobe’s cloud power wins.

7. Limitations and “The Uncanny Valley”

We need to be honest in this review of the Adobe Firefly Video Model: it is not perfect.

- Faces: While better than 2024 models, Firefly still occasionally morphs faces when characters turn their heads quickly.

- Hands: Yes, the “AI hands” problem persists in video, though it is less noticeable in motion.

- Length: Clips are currently capped at 5-10 seconds. You cannot generate a full movie in one click.

For creative industries like fashion, where fabric movement and silhouette are critical, these limitations matter. Dedicated tools often perform better for specific niches—check our guide on best AI fashion sketch to image tools for alternatives that handle clothing physics better than general video models.

Conclusion: The “Boring” Revolution We Needed

Adobe Firefly Video isn’t trying to be the most “viral” AI. It is trying to be the most useful.

By focusing on “Generative Extend” and commercial safety, Adobe has built a tool that fits into existing professional workflows. It solves the boring problems—short clips, missing B-roll, background fills—that actually slow down editors.

If you are a creator, update your Premiere Pro today. The future of editing is here, and it has a “Generate” button.

For help with crafting the perfect text prompts to feed into Firefly, you can adapt the techniques from our Stable Diffusion prompts for realistic portraits guide—lighting and camera angle terminology works universally across AI models.

Frequently Asked Questions (FAQs)

1. Is the Adobe Firefly Video Model free?

It is included in paid Creative Cloud subscriptions (like the “All Apps” or “Premiere Pro” plans), but it uses Generative Credits. You get a set amount per month. If you run out, you can keep generating at slower speeds or pay for more credits.

2. Can I use Firefly Video for commercial client work?

Yes. This is Adobe’s main selling point. Because it is trained on Adobe Stock and public domain content, it is deemed commercially safe, and enterprise users also get legal indemnification against copyright claims, meaning Adobe covers the legal costs.

3. How long are the videos generated by Firefly?

Currently, Text-to-Video and Image-to-Video generations are limited to 5 seconds. Generative Extend can add about 2 seconds to the beginning or end of an existing clip. Adobe plans to increase these limits as server capacity grows.
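For planning purposes, the limits above reduce to simple arithmetic. The sketch below assumes the 5-second generation cap and the roughly 2-second Generative Extend limit stated in this answer; the function names are hypothetical helpers, not part of any Adobe tool.

```python
import math

MAX_CLIP_SECONDS = 5.0    # per-generation cap stated in this FAQ
MAX_EXTEND_SECONDS = 2.0  # approximate Generative Extend limit stated in this FAQ


def clips_needed(target_seconds: float) -> int:
    """Return how many separate generations are needed to fill a duration."""
    if target_seconds <= 0:
        return 0
    return math.ceil(target_seconds / MAX_CLIP_SECONDS)


def extend_covers(gap_seconds: float) -> bool:
    """Return True if a single Generative Extend could plausibly fill the gap."""
    return 0 <= gap_seconds <= MAX_EXTEND_SECONDS


if __name__ == "__main__":
    print(clips_needed(12))    # a 12-second B-roll slot
    print(extend_covers(1.5))  # a 1.5-second shortfall at the end of a shot
    print(extend_covers(3.0))  # a 3-second shortfall
```

A 12-second B-roll slot therefore means three separate generations cut together, while a shortfall longer than about 2 seconds is beyond what a single extend can cover.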

4. Does it work on Mac and Windows?

Yes, since it is cloud-based, it works on both macOS and Windows versions of Premiere Pro and the web-based Firefly.adobe.com.

5. Can Firefly generate sound?

No. Currently, the Firefly Video Model generates video only (silent). You will need to add sound effects and music separately. Adobe has separate “Project Music GenAI Control” tools in beta for audio generation.

6. How does “Generative Extend” work?

It analyzes the pixels in the last few frames of your video clip and predicts what should happen next. It is perfect for holding a shot longer to let a voiceover finish, or smoothing out a jump cut.

7. Is the video quality 4K?

Adobe supports 1080p and up to 4K output, but 4K generation consumes significantly more credits and takes longer to render. Most users currently stick to 1080p for speed.