By Wright | Content Strategy Specialist at AI Creative Blog

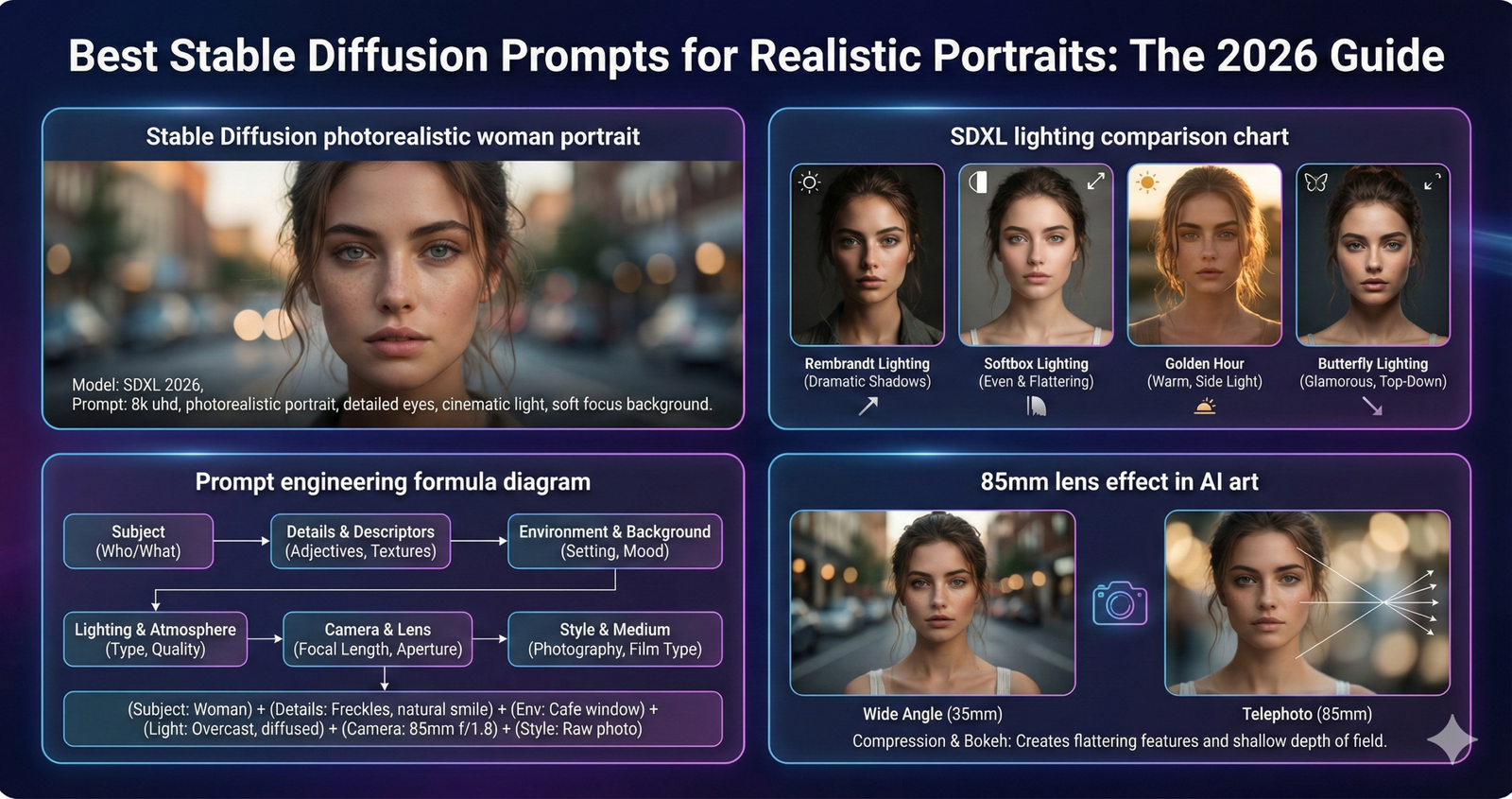

We have all been there. You type “beautiful woman” into Stable Diffusion, and the result looks like a plastic mannequin from a 2005 video game. The skin is too smooth, the eyes are dead, and the lighting is flat.

In 2026, writing Stable Diffusion prompts for realistic portraits is an art form. With the release of SD 3.5 and advanced SDXL fine-tunes like Juggernaut and RealVis, the barrier between AI and photography has all but dissolved, but only if you know how to speak the machine’s language.

This isn’t just a list of random words. This is a forensic breakdown of photorealism. We are going to teach you the physics of light, the language of camera lenses, and the negative prompts that keep “bad anatomy” out of your results.

The “Realism” Formula

To achieve true photorealism in Stable Diffusion, follow this prompt structure: [Subject Description] + [Camera Angle & Lens] + [Lighting Condition] + [Skin Texture Keywords] + [Film Stock/Style].

- Crucial Keywords: Always include “raw photo,” “8k uhd,” “pores,” and “hyperdetailed” to push the model toward rendering skin imperfections rather than smooth plastic.
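The formula above can be sketched as a small helper that keeps each slot separate until the last moment. This is only an illustration: the class name, field names, and example values are assumptions made for this sketch, not part of any Stable Diffusion tool.

```python
from dataclasses import dataclass


@dataclass
class PortraitPrompt:
    """The five slots of the realism formula (all names are illustrative)."""
    subject: str
    camera: str
    lighting: str
    skin: str = "raw photo, 8k uhd, pores, hyperdetailed"
    style: str = ""

    def render(self) -> str:
        # Join the non-empty slots in formula order, comma-separated.
        parts = [self.subject, self.camera, self.lighting, self.skin, self.style]
        return ", ".join(p.strip() for p in parts if p.strip())


prompt = PortraitPrompt(
    subject="a 25-year-old Norwegian woman with freckles",
    camera="close-up, 85mm lens, f/1.8",
    lighting="golden hour",
    style="film grain",
)
print(prompt.render())
```

Keeping the slots separate makes it easy to swap only the lighting or only the lens between generations and see what each part contributes.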

Phase 1: The Anatomy of a Perfect Portrait Prompt

Most beginners fail because they focus on the who and forget the how. Stable Diffusion needs to know how the “photo” was taken.

1. The Subject (Who)

Be specific. “A woman” is weak.

- Better: “A 25-year-old Norwegian woman with freckles, messy braided hair, and striking blue eyes.”

- Pro Tip: Adding specific nationalities or ages helps the AI avoid the generic “AI face” syndrome.

2. The Lens (The Eye)

The camera lens dictates how the face looks.

- 85mm or 100mm: The gold standard for portraits. It flattens the features slightly, making the face look natural.

- 35mm: Includes more background/environment.

- f/1.8 or f/2.8: Creates “Bokeh” (blurry background), which separates the subject and screams “professional photography.”

3. The Lighting (The Mood)

Bad lighting kills realism.

- Rembrandt Lighting: Dramatic, moody, triangle of light on the cheek.

- Golden Hour: Warm, soft sunlight (great for outdoor shots).

- Softbox / Studio Lighting: Clean, even light (great for fashion/LinkedIn style).

If you are interested in applying these lighting principles to fashion sketches specifically, check out our guide on best AI fashion sketch to image tools, where lighting plays a massive role in fabric rendering.

Phase 2: The “Skin Texture” Secret

The dead giveaway of AI art is “perfect skin.” Real humans have pores, peach fuzz, and discoloration. You must explicitly ask for these defects.

Keywords to Add:

“Detailed skin texture, visible pores, skin imperfections, hyperrealistic skin, subsurface scattering, peach fuzz, raw photo.”

Subsurface scattering is a rendering term for how light enters translucent skin, scatters beneath the surface, and exits somewhere else. Adding this keyword can make ears and cheeks glow red when backlit, which adds a strong layer of realism.
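If you reuse the same skin keywords across many prompts, a tiny helper can append only the ones that are missing. This is a minimal sketch; the function name and the exact keyword list are assumptions for illustration.

```python
SKIN_KEYWORDS = (
    "detailed skin texture",
    "visible pores",
    "skin imperfections",
    "subsurface scattering",
    "peach fuzz",
)


def add_skin_keywords(prompt: str, keywords=SKIN_KEYWORDS) -> str:
    """Append any skin keyword the prompt does not already contain."""
    # Compare against the comma-separated terms already in the prompt.
    existing = {term.strip().lower() for term in prompt.split(",")}
    missing = [k for k in keywords if k.lower() not in existing]
    if not missing:
        return prompt
    return ", ".join([prompt.strip().rstrip(",")] + missing)


print(add_skin_keywords("portrait of a man, visible pores, 85mm lens"))
```

Running it twice on the same prompt changes nothing the second time, so it is safe to apply to prompts that already carry some of the keywords.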

Phase 3: Copy-Paste Prompts for Every Scenario

Here are tested Stable Diffusion prompts for realistic portraits that you can copy, paste, and tweak.

Scenario A: The Cinematic Outdoor Shot

This prompt focuses on natural light and environmental depth.

Prompt: “Raw photo, a close-up portrait of a rugged 40-year-old man with a beard, wearing a flannel shirt, standing in a forest, cinematic lighting, golden hour, sun flare, shot on Sony A7R IV, 85mm lens, f/1.8, extremely detailed face, skin pores, sweat droplets, intense gaze, 8k uhd, dslr, soft bokeh background.”

Scenario B: The High-End Studio Fashion

This looks like a cover of Vogue.

Prompt: “Studio portrait of an elegant woman, platinum blonde bob cut, wearing haute couture, dramatic studio lighting, rim lighting, dark grey background, shot on Hasselblad X1D, 100mm, sharp focus, hyperrealistic, makeup texture, high fashion photography, detailed iris.”

For more on creating high-fashion assets, refer to our comparison of specialized tools in WearView vs VModel AI.

Scenario C: The “Candid” Street Photo

This is the hardest style to pull off: making it look accidental.

Prompt: “Candid snapshot, street photography, a laughing woman in a coffee shop, motion blur, slightly out of focus background, neon lights reflection, shot on Fujifilm X-T4, 35mm, film grain, high ISO, imperfect framing, authentic emotion, casual clothes.”

Phase 4: The Negative Prompt (The Safety Net)

In Stable Diffusion, what you don’t ask for is as important as what you do. The “Negative Prompt” box is where you tell the AI what to avoid.

The Universal Realistic Negative Prompt:

“cartoon, anime, 3d render, painting, drawing, plastic skin, smooth skin, doll, blurred, low quality, bad anatomy, ugly, disfigured, extra limbs, fused fingers, bad eyes, cross-eyed, watermark, text, signature.” If your subject is male, also add “makeup, lipstick.”
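Because the makeup terms only apply to male subjects, it can help to assemble the negative prompt in code rather than by hand. A minimal sketch, assuming a hypothetical helper name and a simple boolean flag:

```python
BASE_NEGATIVE = [
    "cartoon", "anime", "3d render", "painting", "drawing",
    "plastic skin", "smooth skin", "doll", "blurred", "low quality",
    "bad anatomy", "ugly", "disfigured", "extra limbs", "fused fingers",
    "bad eyes", "cross-eyed", "watermark", "text", "signature",
]


def build_negative_prompt(male_subject: bool = False) -> str:
    """Return the universal negative prompt, adding makeup terms for men."""
    terms = list(BASE_NEGATIVE)  # copy so the base list is never mutated
    if male_subject:
        terms += ["makeup", "lipstick"]
    return ", ".join(terms)


print(build_negative_prompt(male_subject=True))
```

This keeps the literal text “(if male)” out of the prompt box, where parentheses would be read as emphasis syntax by some interfaces.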

If you are struggling to outline your own prompt strategies, you can adapt the methodology from our ChatGPT prompts for writing blog posts guide, using “Chain of Thought” to plan your image prompts before generating them.

Phase 5: Technical Settings (SDXL / SD 1.5)

Even a great prompt fails with bad settings. In 2026, here is the standard config for realism.

1. Sampling Steps

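- 20-30 Steps: Usually sufficient for SDXL and its fine-tunes. Distilled “Turbo” models are the exception: they are built to run in far fewer steps, typically single digits.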

- 40-60 Steps: Better for older models or extreme detail, though diminishing returns kick in after 50.

2. CFG Scale (Creativity vs. Obedience)

- Keep it between 5 and 7.

- If you go too high (like 12), the image “burns” and colors become oversaturated.

- If you go too low (like 2), the image becomes blurry and ignores your prompt.
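To see why a high CFG value “burns” the image, it helps to look at the arithmetic of classifier-free guidance: the sampler takes the prediction made without your prompt and pushes it toward (and past) the prediction made with your prompt. The sketch below uses made-up two-number lists in place of real model outputs, so treat it as a toy illustration only.

```python
def apply_cfg(uncond, cond, scale):
    """Classifier-free guidance: uncond + scale * (cond - uncond), per element."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


uncond = [0.10, 0.20]  # made-up "no prompt" prediction
cond = [0.30, 0.10]    # made-up "with prompt" prediction

for scale in (1.0, 6.0, 12.0):
    print(scale, apply_cfg(uncond, cond, scale))
```

At a scale of 1 the result is simply the prompted prediction. As the scale grows, the difference between the two predictions is amplified further and further, which is one way to picture the oversaturated look at values like 12.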

3. Image Resolution

- SD 1.5: 512×768 (Portrait). Avoid square 512×512 for portraits; it tends to crop the top of the head.

- SDXL: 1024×1024 or 896×1152. SDXL is native to higher resolutions.
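The settings above can be captured in a small preset function so you do not retype them for every run. The key names below mirror the call parameters of Hugging Face diffusers pipelines (`num_inference_steps`, `guidance_scale`, `width`, `height`); choosing that toolkit is an assumption of this sketch, and the function name and range checks are illustrative.

```python
PRESETS = {
    # Portrait resolutions recommended in this section.
    "sd15": {"width": 512, "height": 768},
    "sdxl": {"width": 896, "height": 1152},
}


def generation_kwargs(family: str, steps: int = 30, cfg: float = 6.0) -> dict:
    """Return call arguments, rejecting values outside this guide's ranges."""
    if family not in PRESETS:
        raise ValueError(f"unknown model family: {family!r}")
    if not 20 <= steps <= 60:
        raise ValueError("steps should be between 20 and 60")
    if not 5 <= cfg <= 7:
        raise ValueError("CFG scale should stay between 5 and 7")
    return {
        "num_inference_steps": steps,
        "guidance_scale": cfg,
        **PRESETS[family],
    }


print(generation_kwargs("sdxl"))
# {'num_inference_steps': 30, 'guidance_scale': 6.0, 'width': 896, 'height': 1152}
```

If you work in Automatic1111 or Forge instead, the same four values map onto the Sampling steps, CFG Scale, Width, and Height controls.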

Phase 6: Choosing the Right Model (Checkpoint)

The base Stable Diffusion model is a “Jack of all trades.” For portraits, you need a specialist model (Checkpoint).

- Juggernaut XL: The current king of photorealism. It has a heavy bias towards realistic lighting and skin texture.

- Realistic Vision (V6/V7): Excellent for consistent faces, though sometimes leans a bit towards “pretty” rather than “gritty.”

- CyberRealistic: Great for balancing realism with stylized versatility.

Comparison:

While Stable Diffusion offers granular control, some users prefer the “one-click” beauty of Midjourney. To see how the other side lives, read our Midjourney v7 release date and features review. Midjourney is easier, but Stable Diffusion gives you the controls of the cockpit.

Phase 7: Moving Beyond Static Images

Once you have mastered the realistic portrait, the next step is animation. The 2026 AI landscape allows you to take these generated portraits and make them talk or move.

- Video Generation: You can feed your realistic portrait into a video model to create a cinematic clip. Learn more about the leading video tech in our OpenAI Sora release date and features update.

- Mobile Editing: If you are generating on the go, the new Apple Intelligence features allow for basic “Clean Up” and editing directly in your iPhone Photos app, perfect for fixing small AI artifacts.

Conclusion: Practice Makes Perfect

Mastering Stable Diffusion prompts for realistic portraits is about observation. Go look at real photography. Notice how light hits the nose. Notice how the background blurs.

Then, describe that to the machine.

Don’t settle for “plastic.” Push the model with keywords like “raw,” “grain,” and “imperfection.” The beauty of realistic AI art lies in the flaws.

For more tools to expand your creative stack, browse our AI Tools category to find the best upscalers and editors to finish your masterpieces.

Frequently Asked Questions (FAQs)

1. Why do my Stable Diffusion portraits look like plastic?

This usually happens because you haven’t specified skin texture. The AI defaults to “smooth” to look pretty. You must add keywords like “detailed skin,” “pores,” “raw photo,” and “grain” to force it to render imperfections. Also, ensure you are using a realistic checkpoint like Juggernaut or Realistic Vision.

2. What is the best resolution for portraits in Stable Diffusion?

For SD 1.5 based models, use 512×768 (Vertical). For SDXL based models, use 896×1152 or 1024×1024. Vertical aspect ratios generally produce better framing for human heads than horizontal or square ratios.

3. Can I use celebrity names in my prompts?

Yes, using celebrity names (e.g., “a mix of Brad Pitt and George Clooney”) is a powerful way to control facial structure. However, be aware of ethical considerations and the terms of service of the platform you are using.

4. What is “Hires. fix” (High-Res Fix) and should I use it?

Yes! Hires. fix is a setting in Automatic1111/Forge that generates the image at a low resolution, upscales it, and then runs a second denoising pass over the larger image. That second pass adds detail to eyes and hair that simply cannot exist at lower resolutions. It is essential for “close-up” realism.
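To plan a high-res pass, you can work out the final size from your base resolution and the upscale factor. This is a rough sketch: the function name is hypothetical, and snapping down to a multiple of 8 is an assumption chosen because Stable Diffusion works in a latent space 8x smaller than the image, not a description of what any particular interface does internally.

```python
def hires_target(width: int, height: int, upscale_by: float = 2.0) -> tuple:
    """Scale both sides, then round down to a multiple of 8."""
    def snap(value: float) -> int:
        return int(value) // 8 * 8

    return snap(width * upscale_by), snap(height * upscale_by)


print(hires_target(512, 768))       # (1024, 1536)
print(hires_target(512, 768, 1.5))  # (768, 1152)
```

A 2x pass quadruples the pixel count, so if you run out of VRAM, try a smaller factor such as 1.5 first.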

5. How does Stable Diffusion compare to DALL-E 3?

DALL-E 3 (inside ChatGPT) is better at following complex instructions but often refuses to generate photorealistic “real people” due to safety filters. Stable Diffusion, when run locally, is not subject to those platform filters and offers far more control over lighting and camera settings. You can compare the ecosystem differences in our Google Gemini vs ChatGPT-4o comparison.

6. What does the “weight” of a prompt mean?

You can emphasize words by wrapping them in parentheses with a weight (this is the syntax used by Automatic1111-style interfaces). For example, (blue eyes:1.5) tells the AI to give “blue eyes” roughly 1.5x the attention of unweighted words. This is useful if the AI is ignoring specific details of your portrait.
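If you build prompts programmatically, the weight syntax is easy to generate. A minimal sketch, assuming the Automatic1111-style `(term:weight)` format; the helper name is made up for this example.

```python
def weighted(term: str, weight: float = 1.0) -> str:
    """Format a term with an Automatic1111-style attention weight."""
    if weight == 1.0:
        return term  # a weight of 1 is the default, so no wrapper is needed
    return f"({term}:{weight:g})"


parts = [
    "portrait of a woman",
    weighted("blue eyes", 1.5),
    weighted("freckles", 1.2),
]
print(", ".join(parts))
# portrait of a woman, (blue eyes:1.5), (freckles:1.2)
```

Weights below 1, such as `weighted("makeup", 0.8)`, de-emphasize a term instead of boosting it.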

7. Do I need a powerful PC for this?

Running SDXL locally requires a good GPU (NVIDIA RTX 3060 or better recommended). However, you can run it on cloud services or use optimized “Turbo” models if you have weaker hardware. For hardware-level AI news, check out our Elon Musk Grok AI update to see where compute power is heading.